2024-12-15 [ENG]: How I use GenAI at work: MidJourney

Generative AI will certainly change the way we work. Today, however, it is difficult to predict how. Adoption of GenAI tools in universities is very complicated, for reasons about which one could write a whole book. This adoption will happen, however. The purpose of this and subsequent posts is to report how I use selected GenAI tools today (end of 2024). Perhaps this description will encourage others in academia to try a particular tool. And maybe it will be useful to me too, when in a few years I look back at these old notes to see “how it was at the beginning of the GenAI transformation”.

Today's post is about one of my favorite tools: MidJourney.

What is MidJourney?

MidJourney (https://www.midjourney.com/) is an online tool designed to generate high-quality, creative images from text prompts. It can produce artwork that ranges from photorealistic images to highly stylized, abstract ones. Other tools with similar capabilities include DALL-E and Stable Diffusion. I, however, prefer MidJourney because of its more creative interpretations of text prompts (a very subjective evaluation).

How do I work with MidJourney?

The following description applies to a paid subscription.

Initially, prompts for MidJourney were sent via Discord, but an online editor https://www.midjourney.com/editor has been available for some time, which makes it easier to view, organize and work with the generated images.

A query can be parameterized to control variety, repeatability, and certain technical parameters of the image (for example, aspect ratio). A list of parameters is available in the documentation at https://docs.midjourney.com/docs/parameter-list. The two I use most often are the personalization token (you can describe your preferences and later apply them to a group of prompts) and aspect ratio.
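
To make the idea of a parameterized query concrete, here is a minimal sketch of how such a prompt string could be assembled. The helper `build_prompt` is hypothetical and only builds text; it does not talk to MidJourney in any way. The flag spellings (`--ar`, `--chaos`, `--seed`, `--p`) are written as I understand them from the parameter list, so please check them against the documentation linked above before relying on them.

```python
def build_prompt(text, aspect_ratio=None, chaos=None, seed=None, personalize=False):
    """Assemble a prompt string with optional parameters appended.

    Hypothetical helper for illustration only: it returns a string
    that you would paste into the editor yourself.
    """
    parts = [text.strip()]
    if aspect_ratio is not None:
        # Technical parameter of the image, e.g. "16:9" for slides.
        parts.append(f"--ar {aspect_ratio}")
    if chaos is not None:
        # Assumed to control variety between the generated images.
        parts.append(f"--chaos {chaos}")
    if seed is not None:
        # Assumed to make results more repeatable across runs.
        parts.append(f"--seed {seed}")
    if personalize:
        # Assumed short form of the personalization token.
        parts.append("--p")
    return " ".join(parts)


print(build_prompt(
    "a lecturer in a spacesuit explaining a neural network",
    aspect_ratio="16:9",
    personalize=True,
))
```

Keeping the text and the parameters separate like this makes it easy to reuse the same description with different aspect ratios, for example one for slides and one for a comic panel.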

The basic query (the imagine command) creates a new image, but among the available commands (full list here: https://docs.midjourney.com/docs/command-list) you can also find describe, a command that generates a text description of an existing image and is very useful for style transfer, and blend, for creative mixing of two images.

Once I have an idea of what I would like to paint, the process itself is usually very iterative. After some brainstorming of my own, I start modifying details and editing the images (yes, you can inpaint parts of an image, for example). On average, after about 10 steps, I have a picture close to what I had in mind.

What do I do with MidJourney?

In my case, the three most frequent uses of Midjourney are slides/interludes for presentations, illustrations for comic strips, and seed materials for short videos.

Presentations:

In recent years I have been giving a lot of lectures and presentations that are closer to popularization than to an in-depth mathematical discussion of a single method. Often to break up blocks of such presentations I use graphics produced by MidJourney. After all, a picture can be worth 1,000 words, so a properly crafted graphic can quickly put the audience into the context of the example I will be talking about on the following slides.

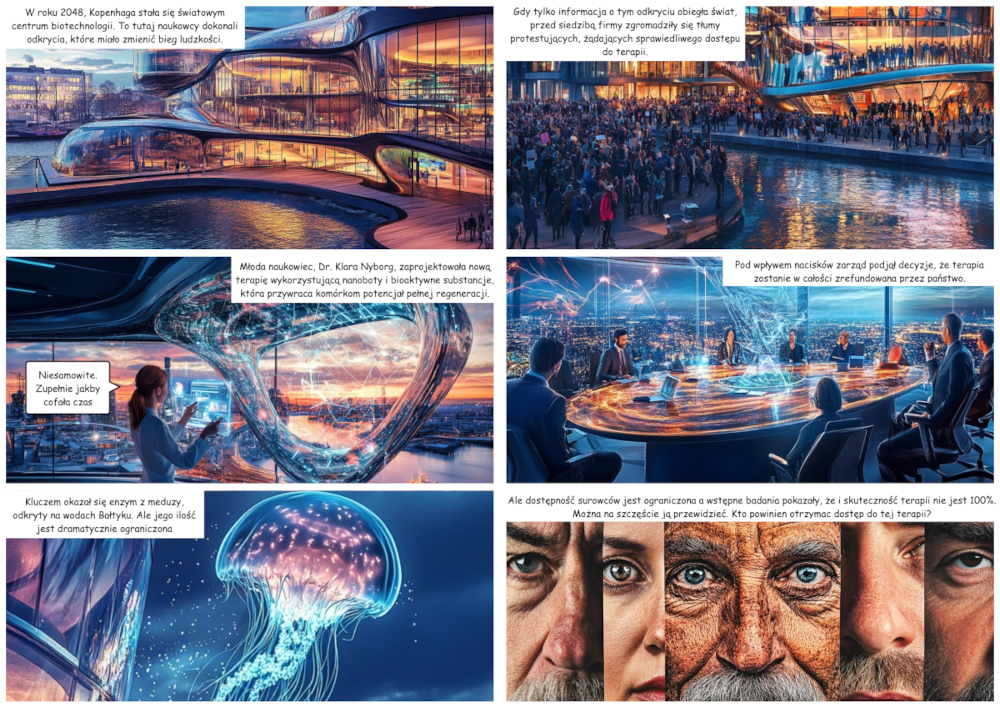

Comic strips:

As a hobby, I write comics that relate to the scientific research I do and sometimes to the classes I teach. Midjourney does a great job of generating graphics for these comics. Illustrations drawn by experts have much more interesting and richer backgrounds and are in many respects more creative, but GenAI is great for prototyping and quicker exploration.

Short videos:

GenAI can do a great job of providing the voice-over for short videos (as I will describe in a post about HeyGen). But watching a video where you can’t see a person’s face is kind of weird. So here, too, I use MidJourney’s ability to create graphics with faces modeled after actual people (in this case me, since I’m the one teaching the course in question) but with interesting additional elements, such as a spacesuit when the video is about using explainable AI in space.

What do I NOT do with MidJourney?

There are, of course, areas in which I not only do not use MidJourney, but strongly discourage its use: graphics for research papers and transferring the style of living artists.

Research papers. Graphics can captivate our imagination, but scientific papers are exactly where critical thinking must be switched on. There are already examples in the literature where realistic-looking but incorrect diagrams were put in articles (see https://www.frontiersin.org/journals/cell-and-developmental-biology/articles/10.3389/fcell.2023.1339390/full). For me, scientific articles are an area with too much responsibility to let GenAI in on autopilot.

Style transfer. Artists spend years working to develop their unique style, so I consider it unethical to imitate that style without permission. This applies to contemporary artists. The works of those who died long ago are generally already in the public domain.

And how do you use Midjourney?

Let me know in the comments.